Ken Satoh

Compliance Check of Norms for Algorithmic Law

Development of a Norm Compliance Checking Method for Algorithmic Law

Verification of the Conformity of Norms for Algorithmic Law

2022/06/15

English Summary

The government now uses computer programs for decision making in public affairs (called "algorithmic law"), which raises serious concern about whether algorithmic law respects human rights. In this research, logical inference for conflict detection will be generalized to compliance checking of norms for algorithmic law.

Japanese Summary

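In recent years, governments have begun using computer programs to make decisions in public affairs (this is called "algorithmic law"). Whether such use of algorithmic law respects human rights has become a major issue. In this research, logical inference for contradiction detection will be generalized to develop a method for checking algorithmic law against norms.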

French Summary

The government now uses computer programs for decision making in public affairs (called "algorithmic law"), and there is great concern about verifying whether algorithmic law respects human rights. In this research, logical inference for conflict detection will be generalized to verify the conformity of algorithmic law with norms.

Overview

As artificial intelligence advances into society, it has become closely tied to the real world, and legal and ethical problems caused by artificial intelligence naturally arise. In particular, when AI plays a role in decision making, it may cause great harm to humans. Such problems have already occurred.

- Facebook's advertising allowed targeting that took race and gender into account [1], depriving excluded people of the chance to see these advertisements and to improve their lives by buying the advertised products.

- The US recidivism prediction system COMPAS has been used since 1989 to calculate the predicted reoffense rate of offenders for bail decisions. Recently, concerns have been raised that its recidivism predictions take race into account [2].

- The French student clearinghouse Admission Post Bac (APB) allocates students to universities, preparatory schools, and technical high schools, but its decision-making process was not disclosed. Legal action was taken to make its allocation strategy public, and, as a result of the subsequent analysis, serious suspicions about its decision making were revealed in another lawsuit [3].

One way to address these problems is for governments to monitor such AI systems. However, when the decisions are made by governments themselves, such monitoring may be lax. A more effective way is therefore to expose these AI systems to the public, especially AI decision-making systems used by governments. AI systems with which governments replace human officials can be called “algorithmic law”, since the decisions they make are legally binding on people. As such systems come into frequent use, algorithmic law will become a major issue for civil rights, so civil control of algorithmic law must be prepared urgently. In fact, in France, a lawsuit demanding that the government publish the specifications of algorithmic law required government agencies to publish their software, and as a result various software has been released [4].

However, checking algorithmic law poses a serious technical problem. Currently, software code is analyzed manually, and as the number of such programs grows, it will become very difficult for humans to find problems. For this reason, this collaboration will investigate automatic compliance checking of algorithmic law against legal and ethical norms.

In this research, the compliance of algorithmic law with legal and ethical norms will be checked by describing in logic both the compliance rules imposed on these AI systems and the specifications of the AI systems used by governments, and by generalizing the conflict detection method proposed in [5,6].

The goals of this research are as follows:

- Logical inference for conflict detection will be generalized to compliance checking of algorithmic law against legal and ethical norms.

- As a specific application, we will consider checking whether a government AI decision-making system that handles private data complies with the GDPR (General Data Protection Regulation). This generalization requires handling abstract normative terms related to fundamental human rights, such as freedom of speech and privacy, rather than the concrete legal terms concerning contract violation that our conflict detection and resolution method originally targeted. This is because algorithmic laws usually restrict fundamental human rights in order to achieve public security, so their conflicts arise with those rights. Legal scholars will advise on how to handle these abstract terms.

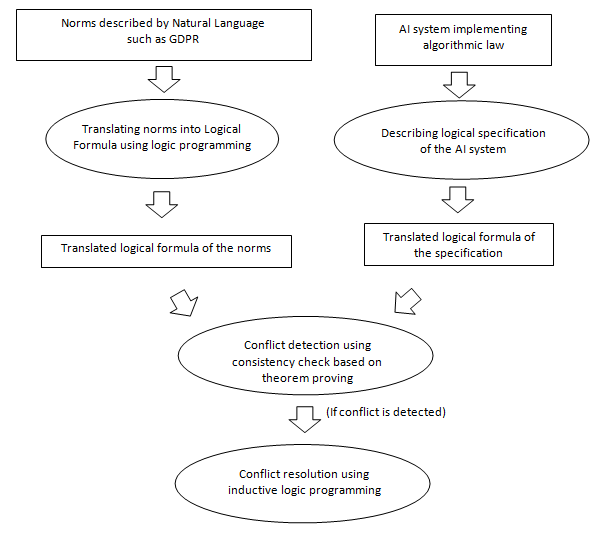

The compliance check process is shown in Figure 1. First, laws that protect fundamental human rights, such as the GDPR, will be translated into logical formulas. However, as noted above, we must consider how to formalize the abstract normative terms in laws and ethics, since there is a large gap between violations of abstract normative terms and real-life events. For example, the GDPR requires an “appropriate measure” to secure private data against unrelated third parties, but defining an appropriate measure logically would be very difficult. To solve this problem, legal scholars will be consulted to obtain plausible definitions of abstract normative terms, and these definitions will then be refined using inductive logic programming [8]: when the inductive logic programming system detects a case that is not covered by the definition of an abstract normative term, it automatically searches for another possible rule set that covers the case. In parallel, the specification of the AI system implementing the algorithmic law is translated into logical formulas. We expect a rigorous specification of the AI system to exist, since otherwise the government's decision-making process itself would be ill-defined. Once a rigorous specification is available, translating it into logical formulas should be straightforward.
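The refinement step for abstract terms can be illustrated with a deliberately small sketch. It assumes a propositional setting in which a definition of an abstract term such as "appropriate measure" is a list of alternative condition sets, and cases labeled by legal scholars are sets of facts. The condition names (`encrypted`, `access_control`, `pseudonymised`) and the functions `covers`, `uncovered`, and `refine` are hypothetical. The search is a simple covering loop chosen for illustration, not the legal-debugging method of [8].

```python
from itertools import combinations

# Hypothetical propositional encoding: a definition of an abstract normative term
# is a list of alternative rule bodies; the term holds for a case if ANY body
# is a subset of the case's facts.


def covers(definition, case):
    """True if at least one rule body is fully satisfied by the case's facts."""
    return any(body <= case for body in definition)


def uncovered(definition, positives):
    """Cases labeled positive by legal experts that the definition misses."""
    return [case for case in positives if not covers(definition, case)]


def refine(definition, positives, negatives, max_len=2):
    """Add rules until every positive case is covered.

    For each uncovered positive case, take the first smallest subset of its
    facts (in sorted order, up to max_len conditions) that covers no negative
    case, and add it as a new alternative rule body.
    """
    refined = list(definition)
    for case in uncovered(refined, positives):
        if covers(refined, case):  # a rule added earlier in this loop may cover it
            continue
        for size in range(1, max_len + 1):
            candidates = (frozenset(c) for c in combinations(sorted(case), size))
            body = next((c for c in candidates
                         if not any(c <= neg for neg in negatives)), None)
            if body is not None:
                refined.append(body)
                break
        else:
            raise ValueError(f"no consistent rule of length <= {max_len} for {sorted(case)}")
    return refined


if __name__ == "__main__":
    # Illustrative expert-provided definition of "appropriate measure".
    initial = [frozenset({"encrypted", "access_control"})]
    positives = [frozenset({"encrypted", "access_control"}),
                 frozenset({"pseudonymised", "access_control"})]
    negatives = [frozenset({"access_control"}), frozenset({"encrypted"})]
    print(uncovered(initial, positives))
    print(refine(initial, positives, negatives))
```

Here the pseudonymised case is initially uncovered; `access_control` alone is rejected because a negative case contains it, so the sketch adds the single-condition rule `pseudonymised`.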

Next, conflicts between the norms and the specification are detected. Given the behavior of the AI system, theorem proving techniques are used to check whether the constraints imposed by the laws are satisfied. If a constraint is violated, the logical reasoning steps are traced back to identify the part of the specification responsible for the violation.
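A minimal sketch of this check, assuming both the specification and the norms are propositional: specification rules are Horn clauses `(name, head, body)`, and each legal constraint is a hypothetical denial listing atoms that must not hold together. Naive forward chaining stands in for the theorem prover, and the recorded derivations are traced back to the specification rules behind a violation. All rule, atom, and constraint names are illustrative, and the constraint is a simplified stand-in, not a faithful encoding of the GDPR.

```python
# Hypothetical propositional setting: specification rules are Horn clauses
# (name, head, body) and each norm is a denial: atoms that must not all hold.


def forward_chain(facts, rules):
    """Naive forward chaining; maps each atom to the (rule, body) that first derived it."""
    support = {fact: None for fact in facts}  # None marks an input fact
    changed = True
    while changed:
        changed = False
        for name, head, body in rules:
            if head not in support and all(atom in support for atom in body):
                support[head] = (name, tuple(body))
                changed = True
    return support


def trace(atom, support):
    """Names of the specification rules used to derive `atom`."""
    if support[atom] is None:
        return set()
    name, body = support[atom]
    used = {name}
    for premise in body:
        used |= trace(premise, support)
    return used


def check(facts, spec_rules, constraints):
    """Return {constraint name: set of responsible spec rules} for each violation."""
    support = forward_chain(facts, spec_rules)
    violations = {}
    for cname, forbidden in constraints:
        if all(atom in support for atom in forbidden):
            culprits = set()
            for atom in forbidden:
                culprits |= trace(atom, support)
            violations[cname] = culprits
    return violations


if __name__ == "__main__":
    spec = [
        ("s1", "collect_health_data", ["applicant"]),
        ("s2", "share_with_insurer", ["collect_health_data"]),
        ("s3", "send_notice", ["applicant"]),
    ]
    # Simplified stand-in for a data-protection requirement.
    norms = [("no_sharing_without_consent", ["share_with_insurer", "no_consent"])]
    result = check(["applicant", "no_consent"], spec, norms)
    print({c: sorted(rules) for c, rules in result.items()})
    # prints {'no_sharing_without_consent': ['s1', 's2']}
```

Rule `s3` also fires but does not contribute to the violation, so the trace isolates only `s1` and `s2` as the part of the specification to revise.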

Once a conflict is found, the specification of the AI system must be revised. We again use inductive logic programming to remove the conflicts [7,8]. For example, if a rule in the AI system has conditions under which it makes a violating decision, the inductive logic programming system adds more restrictive conditions to the rule so that it no longer makes such a decision.
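The revision step can be sketched in the same propositional style. The hypothetical function `specialise` adds the fewest extra conditions from a candidate pool to an offending rule body so that the rule still fires on the cases where the decision should be made, but no longer fires on the violating ones. This brute-force search is for illustration only and is not the revision procedure of [7,8]; the candidate conditions and cases are assumptions.

```python
from itertools import combinations


def fires(body, case):
    """A propositional rule fires when all its conditions hold in the case."""
    return body <= case


def specialise(body, candidates, should_fire, must_not_fire, max_extra=2):
    """Return body plus the fewest extra conditions (up to max_extra) such that
    the rule still fires on every case in should_fire and on none in
    must_not_fire, or None if no such specialisation exists."""
    for k in range(1, max_extra + 1):
        for extra in combinations(sorted(candidates - body), k):
            new_body = body | frozenset(extra)
            if (all(fires(new_body, c) for c in should_fire)
                    and not any(fires(new_body, c) for c in must_not_fire)):
                return new_body
    return None


if __name__ == "__main__":
    # Offending hypothetical rule: share_with_insurer :- collect_health_data.
    body = frozenset({"collect_health_data"})
    candidates = {"consent_given", "applicant", "anonymised"}
    keep = [frozenset({"collect_health_data", "consent_given", "applicant"})]
    violating = [frozenset({"collect_health_data", "applicant"})]
    print(sorted(specialise(body, candidates, keep, violating)))
    # prints ['collect_health_data', 'consent_given']
```

Preferring the fewest added conditions keeps the revised rule as close as possible to the original specification, which matches the aim of restricting a rule only as much as needed to avoid the violation.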

By achieving the above research goals, algorithmic laws will become more compliant with fundamental human rights. This is very important for making future governments more democratic and transparent, since such a logical framework clearly explains where a problem exists in the algorithm and how the algorithmic law should be appropriately revised.

Figure 1: Process of Conflict Detection and Resolution

References

[1] Speicher, T., Ali, M., Venkatadri, G., Ribeiro, F. N., Arvanitakis, G., Benevenuto, F., Gummadi, K. P., Loiseau, P., Mislove, A., "Potential for Discrimination in Online Targeted Advertising", Proceedings of Machine Learning Research 81 (2018).

[2] https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

[3] Tribunal administratif Bordeaux (Bordeaux Administrative Court), req. No. 1504236, Jun. 16, 2016.

[4] https://www.impots.gouv.fr/portail/ouverture-des-donnees-publiques-de-la-dgfip

[5] Li, T., Balke, T., De Vos, M., Satoh, K., Padget, J. A., "Conflict Detection in Composite Institutions", Proceedings of the Second International Workshop on Agent-based Modeling for Policy Engineering (AMPLE 2012), 75-89 (2012).

[6] Li, T., Balke, T., De Vos, M., Padget, J. A., Satoh, K., "Legal Conflict Detection in Interacting Legal Systems", Proceedings of JURIX 2013, 107-116 (2013).

[7] Li, T., Balke, T., De Vos, M., Padget, J. A., Satoh, K., "A Model-Based Approach to the Automatic Revision of Secondary Legislation", Proceedings of the 14th International Conference on Artificial Intelligence & Law (ICAIL 2013), 202-206 (2013).

[8] Fungwacharakorn, W., Tsushima, K., Satoh, K., "Resolving Counterintuitive Consequences in Law Using Legal Debugging", Artificial Intelligence and Law, to appear (2021).